Archive for February, 2023

Do We Still Need Green Screen?

With the advances in artificial intelligence, post-production rotoscope tools are now so good that some DPs are asking whether we still need green screen at all, or at least whether we need it in quite the same way.

Suddenly, it seems any background can be replaced in seconds, allowing DPs to shoot the most complex compositing assignments faster and more economically. Today’s AI-powered rotoscoping tools are powerful and robust, and producers can also use them to repurpose a treasure trove of existing footage packed away in libraries, potentially reducing the need for new production or costly reshoots.

In 2020, Canon introduced the EOS-1D X Mark III camera for pro sports photographers. Taking advantage of artificial intelligence, Canon developed a smart auto-focus system by exposing the camera’s Deep Learning algorithm to tens of thousands of athletes’ images from libraries, agency archives, and pro photographers’ collections. In each instance, when the camera was unable to distinguish the athlete from other objects, the algorithm would be ‘punished’ by removing or adjusting the parameters that led to the loss of focus.

Ironically, the technology having the greatest impact on DPs today may not be camera-related at all. When Adobe introduced its Sensei machine-learning algorithm in 2016, the implications for DPs were enormous. While post-production is not normally in most DPs’ job descriptions, the fact is that today’s DPs already exercise post-camera image control, to remove flicker from discontinuous light sources, for example, or to stabilize images.

In 2019, taking advantage of the Sensei algorithm, Adobe introduced the Content Aware Fill feature for After Effects. The feature extended the power of AI for the first time to video applications as editors could now easily remove an unwanted object like a light stand from a shot.

The introduction of Roto Brush 2 further extended the power of machine learning to the laborious, time-consuming task of rotoscoping. While Adobe’s first iteration used edge detection to identify color differences, Roto Brush 2 used Sensei to look for uncommon patterns, sharp versus blurry pixels, and a panoply of three-dimensional depth cues to separate people from objects.
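To make the older, edge-based idea concrete, here is a minimal sketch of finding a boundary from color differences alone. It is an illustration only, not Adobe’s actual method: the function names, the tiny test frame, and the threshold of 120 are all assumptions chosen for the example.

```python
# Illustrative sketch only: a naive color-difference edge detector.
# Not Adobe's algorithm; names and the threshold are hypothetical.

def color_distance(p, q):
    """Sum of absolute differences between two (R, G, B) pixels."""
    return sum(abs(a - b) for a, b in zip(p, q))


def edge_map(frame, threshold=120):
    """Mark a pixel with 1 when it differs sharply from its right or lower neighbor."""
    height, width = len(frame), len(frame[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            right = (x + 1 < width and
                     color_distance(frame[y][x], frame[y][x + 1]) > threshold)
            below = (y + 1 < height and
                     color_distance(frame[y][x], frame[y + 1][x]) > threshold)
            edges[y][x] = 1 if (right or below) else 0
    return edges


# A 2 x 4 test frame: a light gray wall on the left, a blue shirt on the right.
WALL = (200, 200, 200)
SHIRT = (40, 60, 150)
frame = [
    [WALL, WALL, SHIRT, SHIRT],
    [WALL, WALL, SHIRT, SHIRT],
]

edges = edge_map(frame)
for row in edges:
    print(row)
```

A detector like this finds the boundary only when subject and background differ clearly in color. A gray jacket against a gray wall would defeat it, which is the gap the additional cues described above are meant to close.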

Roto Brush 2 can still only accomplish about 80% of the rotoscoping task, so the intelligence of a human being is still required to craft and tweak the final matte.

So can artificial intelligence really obviate the need for green screen? In THE AVIATOR (2004), DP Robert Richardson was said to have not bothered cropping out the side of an aircraft hangar because he knew it could be done more quickly and easily in the Digital Intermediate. Today, producers using inexpensive tools like Adobe’s Roto Brush 2 have about the same capability to remove and/or rotoscope impractical objects like skyscrapers with ease, convenience, and economy.

For routine applications, it still makes sense to use green screen, as the process is familiar and straightforward. But the option is there for DPs, as never before, to remove or replace a background element in a landscape or cityscape where green screen isn’t practical or possible.
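Part of the reason green screen remains straightforward is that the key can be pulled with very simple arithmetic. The sketch below shows the idea on a toy frame. It is a bare-bones illustration, not how any production keyer works: the function names, the sample colors, and the dominance margin of 40 are assumptions made for the example.

```python
# Illustrative sketch only: a bare-bones green screen key on a toy frame.
# Function names, colors, and the dominance margin are hypothetical.

def green_screen_matte(frame, dominance=40):
    """Return a matte: 1 keeps the pixel (foreground), 0 marks green backdrop."""
    matte = []
    for row in frame:
        matte_row = []
        for r, g, b in row:
            # Treat a pixel as backdrop when green clearly exceeds red and blue.
            is_backdrop = g - max(r, b) > dominance
            matte_row.append(0 if is_backdrop else 1)
        matte.append(matte_row)
    return matte


def composite(frame, background, matte):
    """Show the foreground where the matte is 1 and the new background elsewhere."""
    return [
        [fg if keep else bg for fg, bg, keep in zip(f_row, b_row, m_row)]
        for f_row, b_row, m_row in zip(frame, background, matte)
    ]


GREEN = (20, 200, 30)    # backdrop
SKIN = (210, 160, 140)   # subject
SKY = (90, 140, 230)     # replacement background

frame = [
    [GREEN, SKIN, GREEN],
    [GREEN, SKIN, SKIN],
]
background = [[SKY] * 3, [SKY] * 3]

matte = green_screen_matte(frame)
result = composite(frame, background, matte)
print(matte)
```

The test works only because the backdrop is a known, uniform color. In a landscape or cityscape there is no such color to test against, which is where a learned rotoscoping tool becomes the practical alternative.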

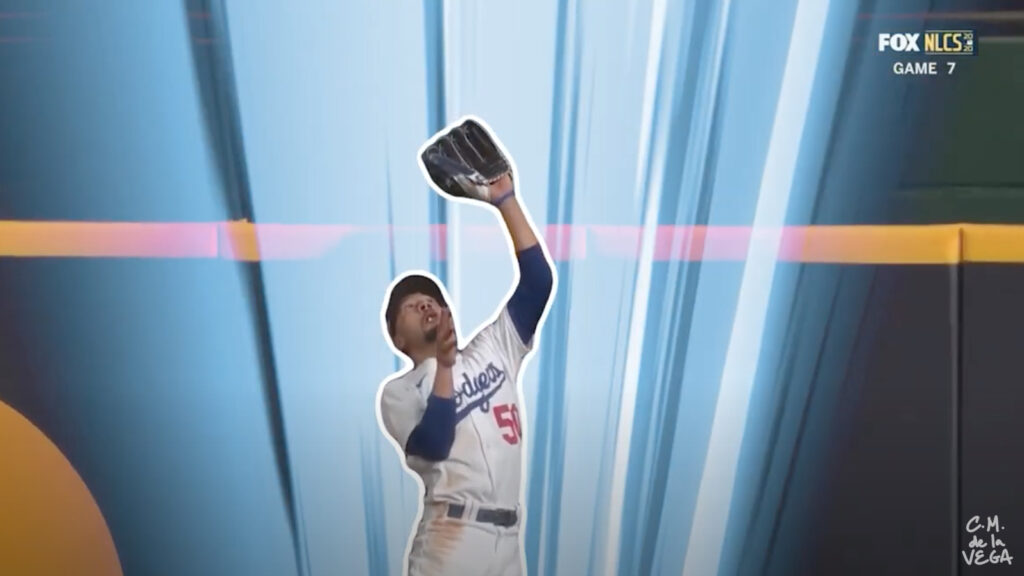

Can AI-powered rotoscoping tools like Adobe’s Roto Brush really replace green screen? Some DPs think so, especially in complex setups such as many cityscapes.

Roto Brush has learned to recognize the human form, and is thus able to isolate it, frame by frame, from a background. But even with the power of AI, Roto Brush still requires some human input.

[Screenshot from CM de la Vega ‘The Art of Motion Graphics’ https://www.youtube.com/watch?v=uu3_sTom_kQ]
